When Maxar’s CTO said back in 2014 that we should have all our satellite data in the cloud, many looked at him funny. For years, the goal had been to keep clouds *out* of our satellite imagery. Confusing.

A bit over a year ago, in collaboration with our AWS friends, things got real. The AWS Snowmobile brought its exabyte-scale capacity in a tractor-trailer to our headquarters in Colorado (circled in the image below), and we pushed our entire 18-year imagery library to the world’s most dynamic cloud infrastructure and technology community. We announced we were all-in on AWS in November 2017. Game on. We moved to where most of our users work. We don’t spend time building tech we can get from our AWS infrastructure. We focus on serving our customers. The CTO wasn’t crazy.

With AWS’s annual Public Sector Summit taking place this week, it seemed like the right time for a brief update on what we’ve been up to since we trucked our full constellation’s 100 PB historical pixel harvest to the cloud.

Undifferentiated geospatial heavy lifting is what we do, and depending on the customer, that can mean many different things. To boil it down, here is what’s going on at DigitalGlobe in three areas: SPACE, MAPS, and ACCESS.

SPACE

- We perform a considerable number of operational tasks across our mission system architecture. Every day we run hundreds of imaging operations, downlink terabytes of mission data through our high-gain antennas, and carry out more than 70 terabytes of image formation and production. All of this runs against performance SLAs that serve key partners and customers around the globe.

- We are migrating our entire sensor image-processing stream to the cloud, building on the cloud-centric ground system architecture we deployed to support the WorldView-4 mission in 2016.

- This quarter, we activated our “firehose” pipeline, which stages our imagery in a cloud-optimized format for faster access and consumption. It takes advantage of the scalability of our new architecture to efficiently feed our downstream data processing, exploitation, and dissemination environments. By the end of the year, we will have our entire constellation’s imagery pumping through the firehose. This will dramatically shorten timelines for downstream applications and eliminate the need to place “processing orders” in our mission ground segment. Given the data volumes and compute required, none of this was possible with our legacy data center architecture.

MAPS

- In many cases, the volume of data coming off the firehose pipeline is intimidating for customers. Very few want hundreds of terabytes of pixels to go with their own maps. Our product managers, imagery experts, and software teams are leveraging the cloud and the co-location of compute and storage to build customer-ready content that fits our users’ diverse use cases and mission needs.

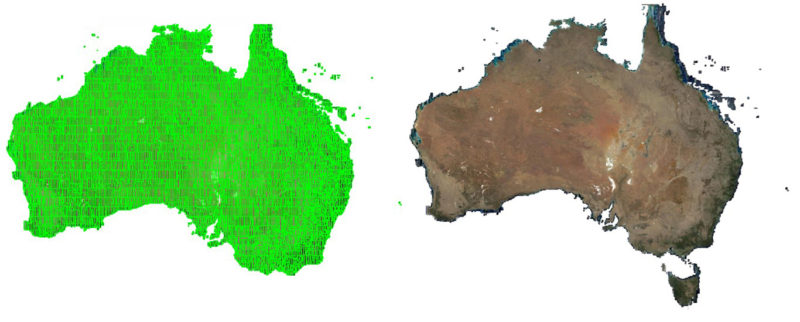

- Our mosaic engines produce high-accuracy, very high-resolution, tonally balanced, and consistent bulk geospatial datasets at continental scale to fuel customer platforms and our subscription products. Our access technologies deliver Maxar content to SecureWatch and GBDX users. They also deliver partner-provided data that we source, condition, and curate in our content management segment. This includes RADARSAT-2 imagery from MDA (another Maxar company), KOMPSAT high-resolution imagery, elevation and ground control data from various suppliers, and a variety of open source image feeds from ESA, NASA, and NOAA.

- Sometimes the least visible things are the most important. Imagine if Netflix didn’t know who owns the rights to Star Trek. What kind of legal mess would follow if it started broadcasting those episodes without licensing controls? The geospatial world faces the same challenge of licensing data correctly. Given the volume of data we process, Maxar maintains a robust content discovery, management, and control capability. We lean heavily on AWS tech like Amazon S3, Amazon Glacier, and Amazon SageMaker to manage the lifecycle of our content and to protect, catalog, and provision it for the business.

ACCESS

- Many user personas need more discrete, interactive interfaces. So we also maintain access solutions that support flexible, iterative interaction with the cloud.

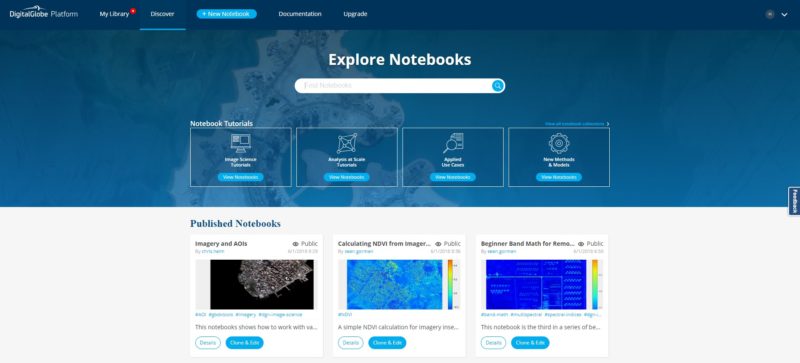

- GBDX Notebooks, which we launched in May 2018, and our ever-expanding online content subscription products, like SecureWatch and Maps API, support highly iterative workflows. These workflows require us to maintain auto-scaling systems that anticipate usage spikes across the globe.

- In the GBDX context, our cloud implementation allows developers to test their data science code on discrete locations for validation and maturation. They can then seamlessly push the task to run on content stacks that are orders of magnitude larger than would otherwise be possible.

The commercialization of space is in the news a lot these days. At Maxar, we’re doing the heavy lifting across the entire geospatial data value chain so our customers can focus on their core mission needs.

We look forward to connecting with you and sharing our experience and plans at the AWS Public Sector Summit and during today’s Earth & Space on AWS Pre-Day sessions. We are presenting “Boulders from Space” in the Spotlight Track of the Pre-Day Summit. Nate Ricklin, Director of Engineering, will present Maxar’s use of Amazon SageMaker on June 21st at 9 a.m. in Room 150 AB.