- Data Labeling: Teach the computer how to identify objects. This aspect of machine learning is paramount to achieving high levels of accuracy in the end result sets.

- Neural Nets: The core algorithms behind deep learning. There are many available, and more seem to be popping up every day. Google, Microsoft, Amazon and academia are investing millions into their respective projects to find the smartest and fastest way to answer questions. After all, time is money! By looking at the average salary from these companies on Glassdoor and the amount of committers they have listed on GitHub, we estimate there is approximately $50 million invested in the development of these open source projects. Google seems to have taken the lead with the most APIs, examples and documentation.

- Compute: The GPU hardware needed to both train and inference. NVIDIA is the world leader in the hardware behind this movement and has spent a lot of resources developing it simply based on market demand.

- Program of Record Integration: Use the output from these algorithms to alert, analyze and provide a common operating picture.

- User Engagement: Provide a solid mechanism to allow end users to achieve their objectives. We have an infinite amount of possibilities.

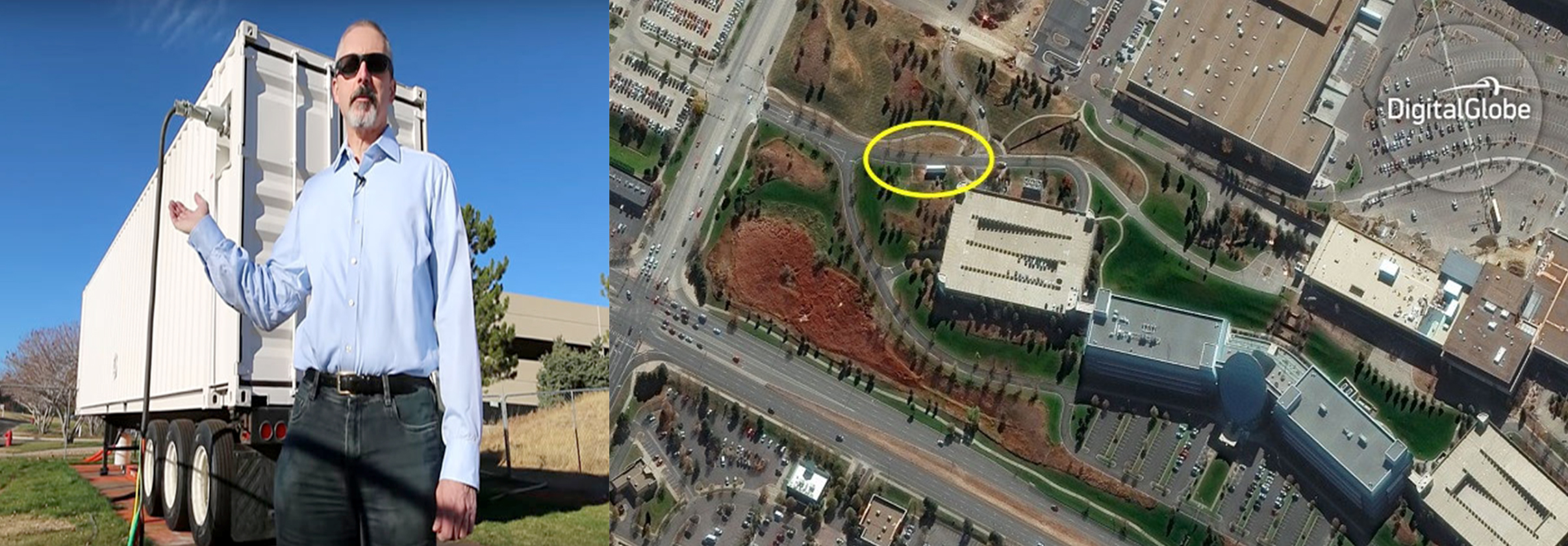

Left: Dr. Walter Scott with the AWS Snowmobile that carried DigitalGlobe’s data to the cloud. Right: The AWS Snowmobile at DigitalGlobe’s headquarters in Westminster, CO, as seen from space by WorldView-3.[/caption]

It takes a tractor trailer truck to move our data. We are the first customer to use the AWS Snowmobile (seen above with DigitalGlobe Founder and Executive Vice President Dr. Walter Scott) to transfer 100 PB of data into Amazon Simple Storage Service (Amazon S3).

Question 2: How much of your data has been labeled?

Several

Left: Dr. Walter Scott with the AWS Snowmobile that carried DigitalGlobe’s data to the cloud. Right: The AWS Snowmobile at DigitalGlobe’s headquarters in Westminster, CO, as seen from space by WorldView-3.[/caption]

It takes a tractor trailer truck to move our data. We are the first customer to use the AWS Snowmobile (seen above with DigitalGlobe Founder and Executive Vice President Dr. Walter Scott) to transfer 100 PB of data into Amazon Simple Storage Service (Amazon S3).

Question 2: How much of your data has been labeled?

Several  DigitalGlobe projects are working to deliver high-fidelity training data for anyone to use. SpaceNet, a deep learning data challenge sponsored by NVIDIA, DigitalGlobe and CosmiQ Works, provides a massive corpus of open source, labeled training data. We are also working with other partners to provide multi-object training datasets that allow the research community to provide more contextual learning in their algorithms. Finally, low-shot and one-shot learning are relatively new techniques where you train a high precision and recall network with less data; we are actively researching how to do this better so we can help our customers make mission-critical decisions. Our government partners are showing tremendous interest in doing more with less training data so low-shot learning and being able to augment real training data with synthetic data is a key research area for us.

Question 3: Where are your GPUs, what does your GPU stack look like and are you using them for training or inference?

We maximize GPU usage in both AWS and locally on servers located in our own data centers to train and iterate on our neural networks. To do any of this at scale, you must have access to a lot of computing power, so multiple options are necessary. Model training is computationally intensive and using the parallelization capability of a GPU shortens the training cycle immensely.

To train our deep learning models, we use a variety of different technologies. This includes harnessing the power of the crowd via our crowdsourcing platform, Tomnod. We leverage Tomnod to create new training samples of objects that can be found in satellite imagery and validate the results from our models. We are grateful for Tomnod because it provides the opportunity for humans to do some of the more strenuous labeling, such as object segmentation.

DigitalGlobe projects are working to deliver high-fidelity training data for anyone to use. SpaceNet, a deep learning data challenge sponsored by NVIDIA, DigitalGlobe and CosmiQ Works, provides a massive corpus of open source, labeled training data. We are also working with other partners to provide multi-object training datasets that allow the research community to provide more contextual learning in their algorithms. Finally, low-shot and one-shot learning are relatively new techniques where you train a high precision and recall network with less data; we are actively researching how to do this better so we can help our customers make mission-critical decisions. Our government partners are showing tremendous interest in doing more with less training data so low-shot learning and being able to augment real training data with synthetic data is a key research area for us.

Question 3: Where are your GPUs, what does your GPU stack look like and are you using them for training or inference?

We maximize GPU usage in both AWS and locally on servers located in our own data centers to train and iterate on our neural networks. To do any of this at scale, you must have access to a lot of computing power, so multiple options are necessary. Model training is computationally intensive and using the parallelization capability of a GPU shortens the training cycle immensely.

To train our deep learning models, we use a variety of different technologies. This includes harnessing the power of the crowd via our crowdsourcing platform, Tomnod. We leverage Tomnod to create new training samples of objects that can be found in satellite imagery and validate the results from our models. We are grateful for Tomnod because it provides the opportunity for humans to do some of the more strenuous labeling, such as object segmentation.

On the inference side, we developed a highly optimized Software Development Kit (SDK) called DeepCore that scales across as many GPUs and geographies as we need. DeepCore is written in modern C++ for speed and scalability with a fair amount of effort put into capturing performance metrics. There are even Python bindings coming soon to empower those data scientists who may not know C++! We deploy applications built on DeepCore within GBDX, as well as on other platforms within the government spaces, depending on the use case. GBDX is DigitalGlobe’s platform within AWS where we run our own algorithms at scale. It is actually a marketplace of consumers and producers where third parties can bring their own algorithms and data into GBDX as well.

Question 4: What kind of algorithms are you using?

We use a variety of algorithms including AlexNet, DetectNet, fully convolutional networks and Yolo9000, all trained on Caffe and TensorFlow with support for CNTK and DLIB in the works. This variety enables us to customize and ensemble the network to the source data, such as multispectral imagery or synthetic aperture radar (SAR). This allows for greater precision and better results from the neural network. We have achieved good results in the past few years and have even patented some of the methodologies:

On the inference side, we developed a highly optimized Software Development Kit (SDK) called DeepCore that scales across as many GPUs and geographies as we need. DeepCore is written in modern C++ for speed and scalability with a fair amount of effort put into capturing performance metrics. There are even Python bindings coming soon to empower those data scientists who may not know C++! We deploy applications built on DeepCore within GBDX, as well as on other platforms within the government spaces, depending on the use case. GBDX is DigitalGlobe’s platform within AWS where we run our own algorithms at scale. It is actually a marketplace of consumers and producers where third parties can bring their own algorithms and data into GBDX as well.

Question 4: What kind of algorithms are you using?

We use a variety of algorithms including AlexNet, DetectNet, fully convolutional networks and Yolo9000, all trained on Caffe and TensorFlow with support for CNTK and DLIB in the works. This variety enables us to customize and ensemble the network to the source data, such as multispectral imagery or synthetic aperture radar (SAR). This allows for greater precision and better results from the neural network. We have achieved good results in the past few years and have even patented some of the methodologies:

- Broad area geospatial object detection using autogenerated deep learning models

- Synthesizing training data for broad area geospatial object detection