First, let’s revisit why Cloud Optimized GeoTIFFs (COGs) are clever. In May 2016, GDAL v2.1.0 added a virtual file system interface for AWS S3 cloud storage that supports HTTP range requests. This interface enables random access to sub-windows of imagery as needed. COG is a smart ordering of the bits representing an image file, which enables efficient random access to pixels.

Why does this matter? Satellite imagery is heavy, especially DigitalGlobe imagery, where 30 cm pixels add up fast. A single strip of imagery can be 20 GB, and 40 GB after you pansharpen it. Moving around chunks of data this big is a pain. With COG and range requests, you no longer need to read the whole 20-40 GB image. Instead, you read only the segment of the image you’re interested in.
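To make the savings concrete, here is a minimal sketch of the arithmetic behind a windowed read. It assumes the image is stored as 512 x 512 pixel internal tiles (a common choice, not a requirement) and that each tile's byte offset and length are known from the TIFF's tile index. The function names, the tile size and the example numbers are illustrative assumptions, not part of GDAL or any DigitalGlobe API.

```python
def tiles_for_window(col_off, row_off, width, height, tile_size=512):
    """Return (tile_row, tile_col) indices of the internal tiles a pixel window touches."""
    first_col = col_off // tile_size
    last_col = (col_off + width - 1) // tile_size
    first_row = row_off // tile_size
    last_row = (row_off + height - 1) // tile_size
    return [
        (row, col)
        for row in range(first_row, last_row + 1)
        for col in range(first_col, last_col + 1)
    ]


def range_header(offset, byte_count):
    """Build the HTTP Range header value for one tile (byte ranges are inclusive)."""
    return f"bytes={offset}-{offset + byte_count - 1}"


# Hypothetical request: a 1024 x 1024 pixel window somewhere inside a large strip.
tiles = tiles_for_window(col_off=10000, row_off=20000, width=1024, height=1024)
print(len(tiles))  # 9 tiles for this unaligned window

# Hypothetical tile stored at byte 1,000 with a compressed length of 500 bytes.
print(range_header(1000, 500))  # bytes=1000-1499
```

In this example the client fetches nine tiles, and that count depends only on the window and tile size, not on how large the full strip is.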

These concepts open the door to countless potential innovations. The DigitalGlobe FLAME (Flexible Large Area Mosaic Engine) team was an early adopter of cloud-native storage. Today, the team leverages MapServer, COG and HTTP Range Requests to stream imagery directly out of cloud storage via OGC tile services, and sponsors developers, like Spatialys, to improve GDAL support for HTTP range requests.

COG and clever pyramiding of images allow for the minimal amount of data to be stored, clipped and rendered at any given mosaic zoom level. Large mosaics are then rapidly rendered on modest server hardware. See the results in action below:

GBDX has been tackling similar problems, managing continental-scale analytics across our satellite imagery. Moving big strips of data around makes both viewing and computing hard. While we are most familiar with viewing satellite imagery, we know the future demands derived data products, and for that, efficient compute is critical.

Our Raster Data Access (RDA, yes we love acronyms) team found a way to make satellite imagery data more consumable by breaking strips into chips. And using COG, we can perform range requests to programmatically create chips from strips.

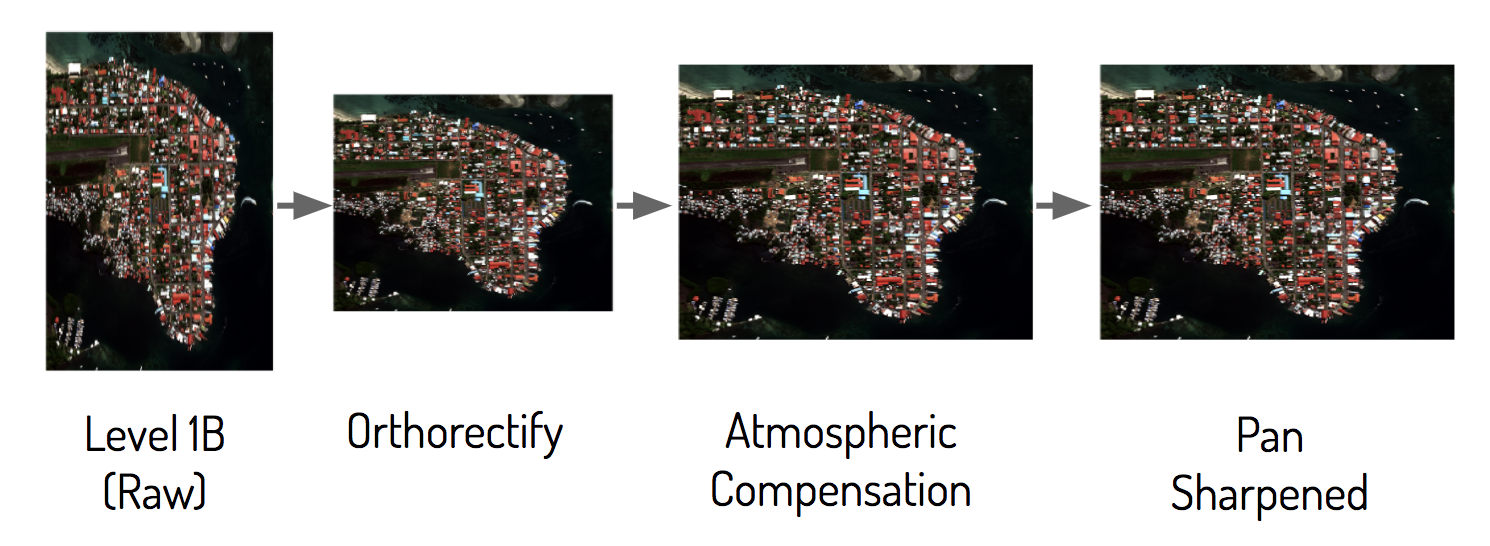
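As a rough illustration of breaking a strip into chips, the sketch below enumerates the pixel windows that cover a strip. It is not the RDA implementation: the function name, the 256-pixel chip size and the strip dimensions are assumptions chosen for the example. Each window it yields could then be served by range requests against the underlying COG.

```python
def chip_windows(strip_width, strip_height, chip_size=256):
    """Yield (col_off, row_off, width, height) windows that cover the strip.

    Chips along the right and bottom edges are clipped to the strip bounds.
    """
    for row_off in range(0, strip_height, chip_size):
        for col_off in range(0, strip_width, chip_size):
            width = min(chip_size, strip_width - col_off)
            height = min(chip_size, strip_height - row_off)
            yield (col_off, row_off, width, height)


# Hypothetical (tiny) strip of 600 x 300 pixels.
chips = list(chip_windows(600, 300))
print(len(chips))   # 6
print(chips[-1])    # (512, 256, 88, 44)
```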

What’s really exciting is that, now that we have manageable chips, we can perform cloud-native geospatial processing with them. Typically, users interact with satellite imagery via a Tile Map Service (TMS) where the data has been pre-processed (e.g., Mapbox, Google Maps, Apple Maps). But for performing analytics, pre-processed satellite imagery creates a massive logistics problem. Should the imagery be orthorectified and/or atmospherically compensated? Pansharpened? There are simply too many possible combinations a customer might need.

So, how do we deliver access to all potential imagery derivatives? Can we provide flexibility while still enabling the simple experience and pattern of a TMS?

Historically, providing access to raw imagery and its derivatives was challenging, especially to non-image scientists. The pattern typically involved downloading heavy datasets locally, or blindly writing algorithms to be run remotely. But now, RDA provides users with deferred graphs that can dynamically generate any imagery derivatives necessary.
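The idea of a deferred graph can be sketched in a few lines. This is a toy model under stated assumptions, not RDA's actual engine or API: the `Node` class, the `read_chip` and `step` helpers, and the operator labels are all hypothetical, and the "operators" only record their names instead of touching pixels. The point it shows is that building the graph does no work; processing happens only when a specific chip is requested.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass(frozen=True)
class Node:
    """One step in a deferred graph. Nothing runs until compute() is called."""
    name: str
    func: Callable
    inputs: Tuple["Node", ...] = ()

    def then(self, name, func):
        """Chain a new operator onto this node and return the new node."""
        return Node(name, func, (self,))

    def compute(self, chip_id):
        """Evaluate upstream nodes for one chip, then apply this node's operator."""
        upstream = [node.compute(chip_id) for node in self.inputs]
        return self.func(*upstream) if upstream else self.func(chip_id)


def read_chip(chip_id):
    # Placeholder source: a real system would fetch pixels via range requests.
    return {"chip": chip_id, "steps": ["read"]}


def step(label):
    # Placeholder operator: records its label instead of transforming pixels.
    def apply(data):
        return {**data, "steps": data["steps"] + [label]}
    return apply


# Two derivatives share the same upstream nodes; no work has happened yet.
source = Node("read", read_chip)
ortho = source.then("orthorectify", step("orthorectify"))
pansharpened = ortho.then("pansharpen", step("pansharpen"))
compensated = ortho.then("atmospheric_compensation", step("atmospheric_compensation"))

# Work happens only when a client asks for a specific chip.
result = pansharpened.compute(chip_id=(3, 7))
print(result["steps"])  # ['read', 'orthorectify', 'pansharpen']
```

Because each derivative is just a different path through the graph, supporting a new combination means linking operators differently, not pre-processing and storing another copy of the imagery.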

On the server side, where the imagery lives, we have a collection of operators (e.g., orthorectification, atmospheric compensation, pansharpening) plus a graph engine to link operators, which then transform data into a clean set of imagery chips. Check out our RDA conceptual workflow below:

With a standard like COG for fast random access storage, we can stream these chips of imagery to clients for analysis and visualization. To create an easy-to-use interface for exploration and analysis, we’ve built GBDX Notebooks on top of RDA.

One key challenge of creating a productive and enjoyable experience analyzing satellite imagery is the time it takes to move imagery and run analytical compute against it. These challenges are often compounded by the need for specialist and proprietary software to perform analysis. RDA allows us to move imagery quickly, and GBDX Notebooks allows us to compute against it with equal velocity.

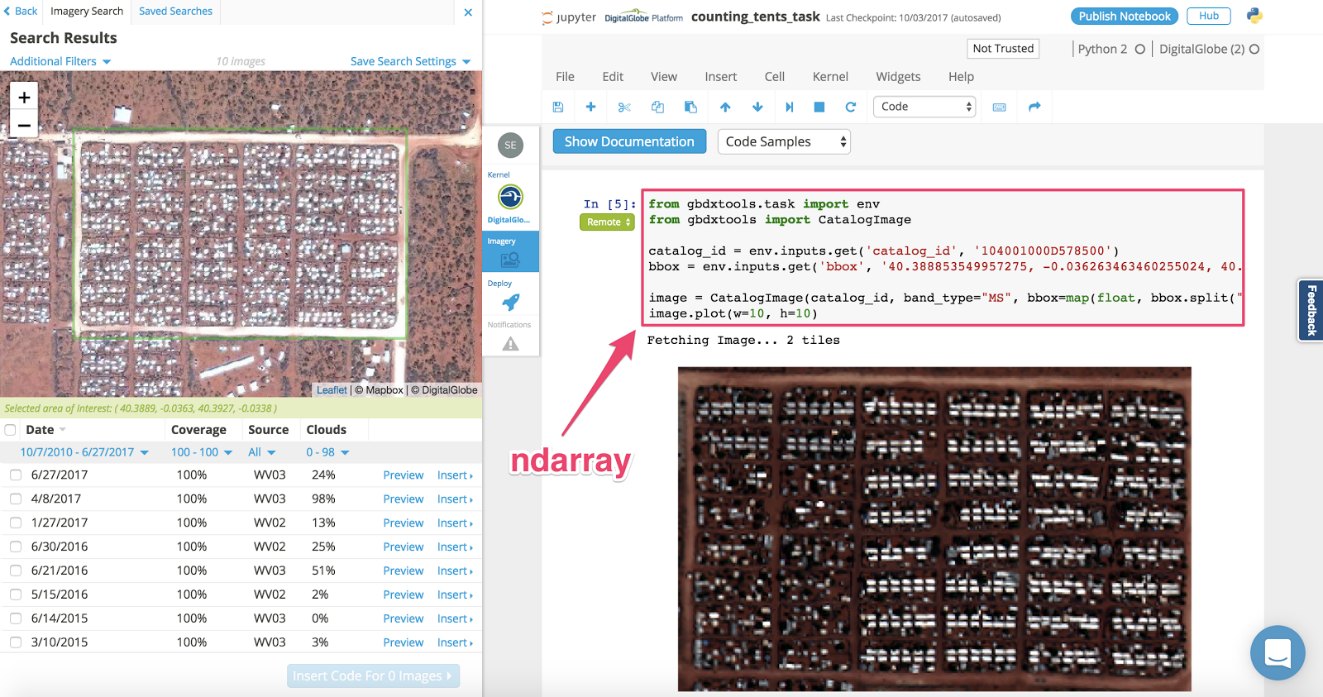

Enabling greater accessibility, GBDX Notebooks is built on top of Jupyter, bringing satellite imagery to an environment where users are already engaged and working. We’ve also developed simple data access patterns that enable users to focus on creativity and analysis, instead of data structuring and cleaning.

GBDX Notebooks features a simple imagery discovery interface and the ability to stream selected imagery into the notebook as an ndarray.

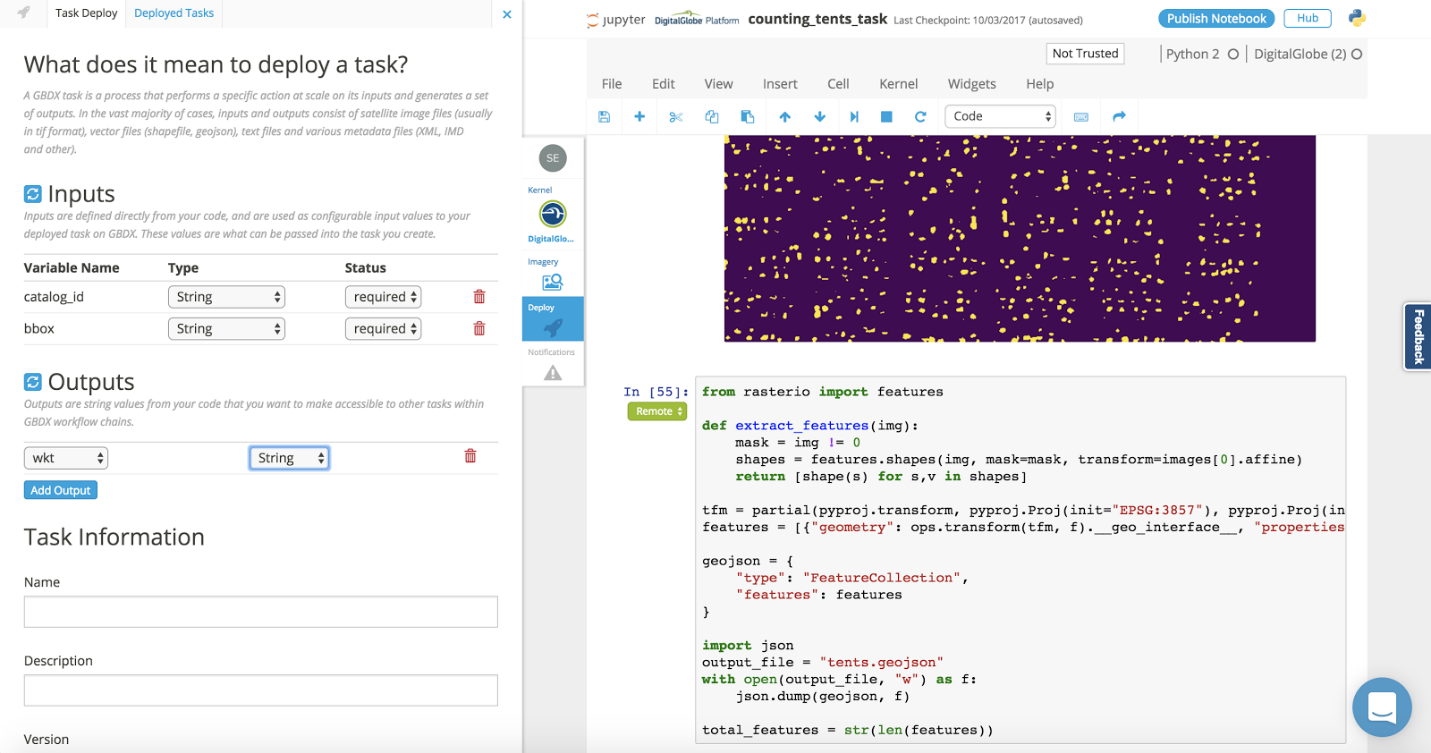

Now we can immediately start writing analytical code against the imagery and have results rendered directly in the notebook. And by sending the algorithms to the data, we accelerate the analytics process. When executing cells in GBDX Notebooks against imagery, the code is pushed to AWS (where the data resides) for processing. Then, results are delivered back into the notebook.

For seamless integration, we implemented Dask to enable parallel processing within the notebook. For instance, we might want to perform a spatial autocorrelation to detect tents in the image above, quickly testing different thresholds on residuals, and then interact with results to tune the model:

Once we are satisfied with a model, we can dockerize the notebook and task GBDX to run it at scale across large swaths of geography. The results of the analysis are then returned to the notebook for visualization and further analysis or iteration.

We are super excited about the potential of Cloud Native Geospatial. The concepts behind COG are truly opening the door to an exciting new chapter for the geospatial industry. To get the ball rolling, we’ve converted our IKONOS collection of imagery to COG, as well as NOAA’s night lights data from the Visible Infrared Imaging Radiometer Suite (VIIRS). The VIIRS night lights data will be made available as COG-based mosaics. We’ll also provide access to the raw data, including GeoJSON of the metadata, for cloud-based analytics. We’ll be enabling creative ways to interact with the data for customers and the community in the near future. We look forward to continuing our work to embrace the COG standard and to push the boundaries of what is possible with satellite imagery and geospatial data.
